|

MyCaffe

1.12.2.41

Deep learning software for Windows C# programmers.

|

|

MyCaffe

1.12.2.41

Deep learning software for Windows C# programmers.

|

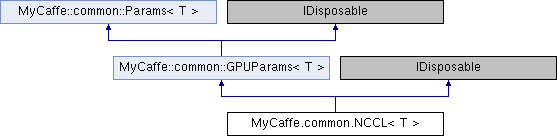

The NCCL class manages the multi-GPU operations using the low-level NCCL functionality provided by the low-level Cuda Dnn DLL. More...

Public Member Functions | |

| NCCL (CudaDnn< T > cuda, Log log, Solver< T > root_solver, int nDeviceID, long hNccl, List< ManualResetEvent > rgGradientReadyEvents) | |

| The NCCL constructor. More... | |

| new void | Dispose () |

| Release all GPU and Host resources used. More... | |

| void | Broadcast () |

| Broadcast the data to all other solvers participating in the multi-GPU session. More... | |

| void | Run (List< int > rgGpus, int nIterationOverride=-1) |

| Run the root Solver and coordinate with all other Solver's participating in the multi-GPU training. More... | |

Public Member Functions inherited from MyCaffe.common.GPUParams< T > Public Member Functions inherited from MyCaffe.common.GPUParams< T > | |

| GPUParams (CudaDnn< T > cuda, Log log, Solver< T > root_solver, int nDeviceID) | |

| The GPUParams constructor. More... | |

| void | Dispose () |

| Release all GPU and Host resources used. More... | |

| void | SynchronizeStream () |

| Synchronize with the Cuda stream. More... | |

| void | Configure (Solver< T > solver) |

| Configure the GPU Params by copying the Solver training Net parameters into the data and diff buffers. More... | |

| void | apply_buffers (BlobCollection< T > rgBlobs, long hBuffer, long lTotalSize, Op op) |

| Transfer between the data/diff buffers and a collection of Blobs (e.g. the learnable parameters). More... | |

Public Member Functions inherited from MyCaffe.common.Params< T > Public Member Functions inherited from MyCaffe.common.Params< T > | |

| Params (Solver< T > root_solver) | |

| The Param constructor. More... | |

Additional Inherited Members | |

Protected Attributes inherited from MyCaffe.common.GPUParams< T > Protected Attributes inherited from MyCaffe.common.GPUParams< T > | |

| CudaDnn< T > | m_cuda |

| The instance of CudaDnn that provides the connection to Cuda. More... | |

| Log | m_log |

| The Log used for output. More... | |

| long | m_hStream |

| The handle to the Cuda stream used for synchronization. More... | |

Protected Attributes inherited from MyCaffe.common.Params< T > Protected Attributes inherited from MyCaffe.common.Params< T > | |

| long | m_lCount |

| size of the buffers (in items). More... | |

| long | m_lExtra |

| size of the padding added to the memory buffers. More... | |

| long | m_hData |

| Handle to GPU memory containing the Net parameters. More... | |

| long | m_hDiff |

| Handle to GPU memory containing the Net gradient. More... | |

| int | m_nDeviceID |

| The Device ID. More... | |

Properties inherited from MyCaffe.common.Params< T > Properties inherited from MyCaffe.common.Params< T > | |

| long | count [get] |

| Returns the size of the buffers (in items). More... | |

| long | data [get] |

| Returns the handle to the GPU memory containing the Net parameters. More... | |

| long | diff [get] |

| Returns the handle to the GPU memory containing the Net gradients. More... | |

The NCCL class manages the multi-GPU operations using the low-level NCCL functionality provided by the low-level Cuda Dnn DLL.

NVIDIA's NCCL 'Nickel' is an NVIDIA library designed to optimize communication between multiple GPUs.

When using multi-GPU training, it is highly recommended to only train on TCC enabled drivers, otherwise driver timeouts may occur on large models.

| T | Specifies the base type float or double. Using float is recommended to conserve GPU memory. |

Definition at line 266 of file Parallel.cs.

| MyCaffe.common.NCCL< T >.NCCL | ( | CudaDnn< T > | cuda, |

| Log | log, | ||

| Solver< T > | root_solver, | ||

| int | nDeviceID, | ||

| long | hNccl, | ||

| List< ManualResetEvent > | rgGradientReadyEvents | ||

| ) |

The NCCL constructor.

| cuda | Specifies the CudaDnn connection to Cuda. |

| log | Specifies the Log for output. |

| root_solver | Specifies the root Solver. |

| nDeviceID | Specifies the device ID to use for this instance. |

| hNccl | Specifies the handle to NCCL created using CudaDnn::CreateNCCL, or 0 for the root solver as this is set-up in NCCL::Run. |

| rgGradientReadyEvents | Specifies a list of events used to synchronize with the other Solvers. |

Definition at line 282 of file Parallel.cs.

| void MyCaffe.common.NCCL< T >.Broadcast | ( | ) |

Broadcast the data to all other solvers participating in the multi-GPU session.

Definition at line 313 of file Parallel.cs.

| new void MyCaffe.common.NCCL< T >.Dispose | ( | ) |

Release all GPU and Host resources used.

Definition at line 299 of file Parallel.cs.

| void MyCaffe.common.NCCL< T >.Run | ( | List< int > | rgGpus, |

| int | nIterationOverride = -1 |

||

| ) |

Run the root Solver and coordinate with all other Solver's participating in the multi-GPU training.

IMPORTANT: When performing multi-GPU training only GPU's that DO NOT have a monitor connected can be used. Using the GPU with the monitor connected will cause an error. However, you can use GPU's that are configured in either TCC mode or WDM mode, BUT, all GPU's participating must be configured to use the same mode, otherwise you may experience upredictable behavior, the NVIDIA driver may crash, or you may experience the infamouse BSOD ("Blue Screen of Death") - so as the saying goes, "Beware to all who enter here..."

| rgGpus | Specifies all GPU ID's to use. |

| nIterationOverride | Optionally, specifies a training iteration override to use. |

Definition at line 351 of file Parallel.cs.