This tutorial guides you through creating and training a Temporal Fusion Transformer (TFT), based on [1] and [2], to predict retail demand flows.

Database Preparation

For this tutorial, you will need to download and install the free developer edition of SQL Server from Microsoft.

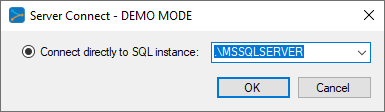

Once it is installed, the SignalPop AI Designer prompts you at startup for the SQL instance to use; select the .\MSSQLSERVER instance.

Note, if you have already created a DNN database for SQL Server Express, you will need to select a different directory when creating the DNN database for SQL Server Developer edition.

Step 1 – Download the Raw Data

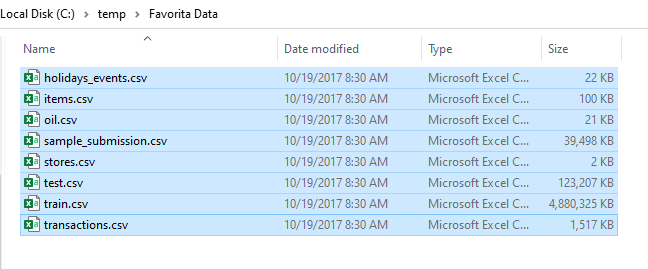

In the first step, you must download the 8 raw data files from the Kaggle Favorita dataset. Once downloaded and extracted, you will have the following raw CSV files.

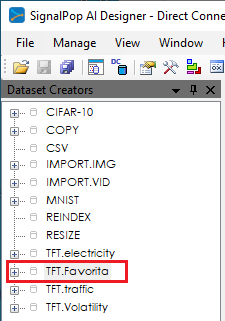

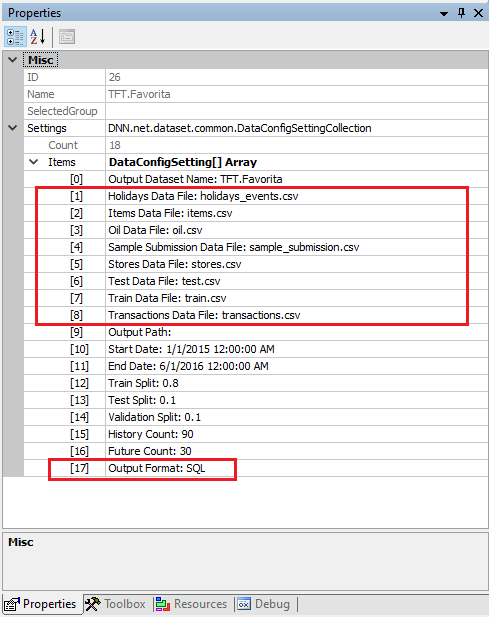

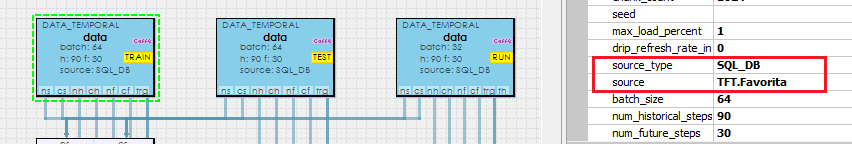

Using the SignalPop AI Designer’s Favorita Dataset Creator, we will load these raw data files into SQL or SQL Express. From the Dataset Creators pane, double click on the TFT.Favorita dataset creator.

Double clicking on this dataset creator will open the dataset creator window and properties.

Add the paths to the 8 raw data files to the properties shown above and select the SQL output format. Next, select the Run button from the toolbar menu.

This process loads all raw data from the files, organizes and preprocesses the data and then stores the data into the TFT.Favorita DataSet within the SQL database. Loading the database can take some time, but luckily you only need to do this process once.
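The Log Unit Sales variable used later by the model suggests that raw unit sales are moved into log space during preprocessing. The following is only a minimal sketch of such a transform; the use of `log1p` and the clipping of negative values (product returns) to zero are assumptions, not a description of what the Favorita Dataset Creator actually does, and both function names are hypothetical.

```python
import math


def log_unit_sales(unit_sales):
    """Map raw unit sales to log space.

    Assumption: negative values (returns) are clipped to zero before
    applying log1p, so that zero sales map to exactly 0.0.
    """
    return [math.log1p(max(0.0, float(v))) for v in unit_sales]


def inverse_log_unit_sales(values):
    """Map log-space values back to unit sales."""
    return [math.expm1(v) for v in values]


# Illustrative values only; these are not taken from the Favorita files.
raw = [0, 1, 7.5, -2, 120]
logged = log_unit_sales(raw)
restored = inverse_log_unit_sales(logged)
```

Predictions made in log space would need the inverse transform applied before they can be read as unit counts.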

Step 2 – Create the Temporal Fusion Transformer Model

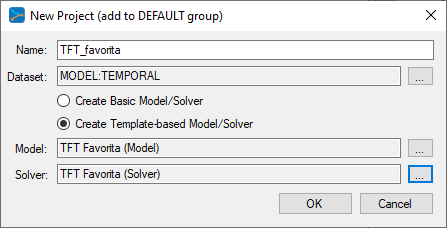

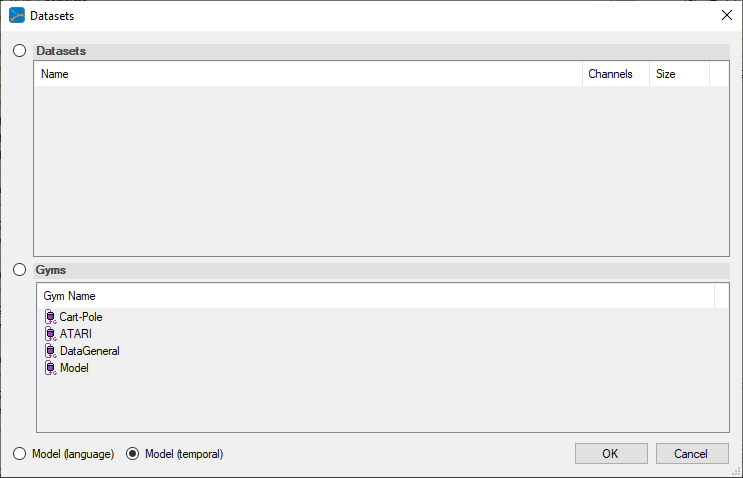

Next, we need to create the TFT_favorita project that uses the MODEL (temporal) based dataset. MODEL (temporal) based datasets rely on the model itself to collect the data used for training.

First, select the Add Project button at the bottom of the Solutions window, which will display the New Project dialog.

To select the MODEL (temporal) dataset, click on the ‘…’ button to the right of the Dataset field and select the MODEL (temporal) radio button at the bottom of the dialog.

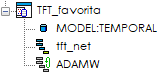

Upon selecting OK on the New Project dialog, the new TFT_favorita project will be displayed in the Solutions window.

Step 3 – Review the Model

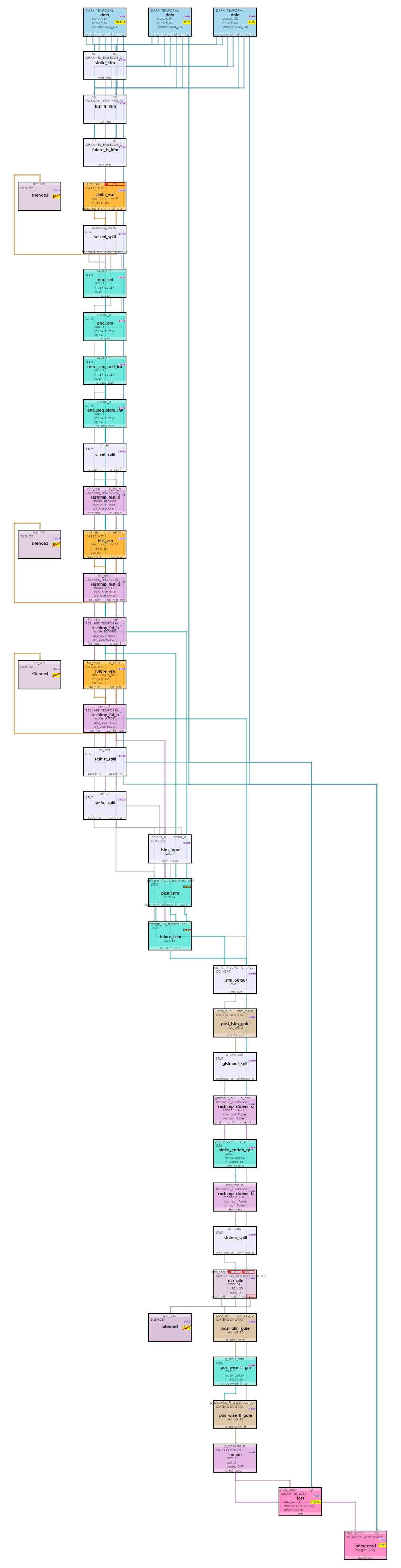

Double click on the tft_net model name within the TFT_favorita project to display the model editor, where you can review the model. As you can see below, the TFT models are quite complex.

The TFT model used to predict retail demand flows uses 90 historical steps and predicts 30 future steps. The model takes the following data inputs.

Static Numeric – none

Static Categorical – 9, shape = { B, 1 }

- ItemNum (STATIC, CATEGORICAL)
- StoreNum (STATIC, CATEGORICAL)
- Store City ID (STATIC, CATEGORICAL)
- Store State ID (STATIC, CATEGORICAL)
- Store Type ID (STATIC, CATEGORICAL)
- Store Cluster (STATIC, CATEGORICAL)
- Item Family ID (STATIC, CATEGORICAL)
- Item Class ID (STATIC, CATEGORICAL)
- Item Perishable (STATIC, CATEGORICAL)

Historical Numeric – 6, shape = { B, 90, 1 }

- Log Unit Sales (OBSERVED, NUMERIC)
- Oil Price (OBSERVED, NUMERIC)
- Transactions (OBSERVED, NUMERIC)
- Day of Week (KNOWN, NUMERIC)
- Day of Month (KNOWN, NUMERIC)
- Month (KNOWN, NUMERIC)

Historical Categorical – 4, shape = { B, 90, 1 }

- Open (KNOWN, CATEGORICAL)
- On Promotion (KNOWN, CATEGORICAL)
- Holiday Type (KNOWN, CATEGORICAL)
- Holiday Locale ID (KNOWN, CATEGORICAL)

Future Numeric – 3, shape = { B, 30, 1 }

- Day of Week (KNOWN, NUMERIC)
- Day of Month (KNOWN, NUMERIC)
- Month (KNOWN, NUMERIC)

Future Categorical – 4, shape = { B, 30, 1 }

- Open (KNOWN, CATEGORICAL)
- On Promotion (KNOWN, CATEGORICAL)
- Holiday Type (KNOWN, CATEGORICAL)
- Holiday Locale ID (KNOWN, CATEGORICAL)
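To get a feel for the size of one batch of inputs, the input groups listed above can be written down as a small table and multiplied out. This is a rough sketch: it reads each entry as "number of variables, per-variable shape", uses the batch size of 64 from the training step of this tutorial, and the names `INPUT_GROUPS` and `values_per_batch` are hypothetical rather than part of MyCaffe.

```python
from math import prod

NUM_HIST, NUM_FUTURE = 90, 30

# group name -> (number of variables, per-variable shape without the batch axis B)
INPUT_GROUPS = {
    "static_categorical": (9, (1,)),
    "historical_numeric": (6, (NUM_HIST, 1)),
    "historical_categorical": (4, (NUM_HIST, 1)),
    "future_numeric": (3, (NUM_FUTURE, 1)),
    "future_categorical": (4, (NUM_FUTURE, 1)),
}


def values_per_batch(batch_size):
    """Count the scalar input values in one batch, per input group."""
    return {
        name: count * batch_size * prod(shape)
        for name, (count, shape) in INPUT_GROUPS.items()
    }


sizes = values_per_batch(64)
total = sum(sizes.values())
print(sizes)
print("total input values per batch:", total)
```

The historical groups dominate the count because each of their variables spans 90 steps, compared with 30 steps for the future groups and a single value for the static ones.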

Note, the Favorita dataset is large and requires around 70 GB of RAM when fully loaded. However, once loaded into memory, training commences quickly because there is no lag from loading data from disk. To help alleviate data loading times, the dataset may be loaded into the MyCaffe In-Memory Temporal Database, which holds the data in memory until unloaded.

Each data layer connects to the temporal dataset specified by the source property within each DataTemporal Layer.

Step 4 – Training

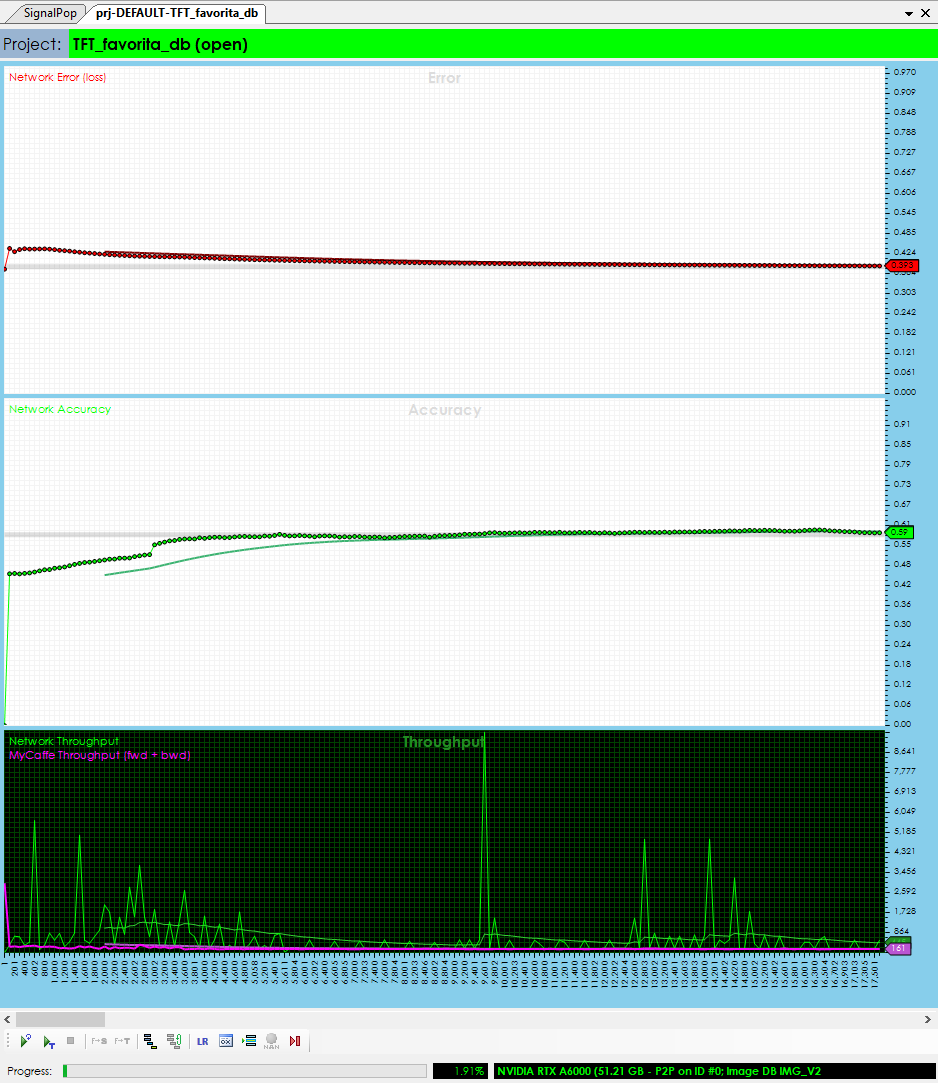

The training step uses the ADAMW solver with no weight decay (the ADAM solver may be used as well), a base learning rate of 0.001, momentum = 0.9 and momentum2 = 0.999.
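To illustrate why ADAM can stand in for ADAMW when weight decay is zero, here is a minimal sketch of a single-parameter AdamW update with decoupled weight decay. It is a textbook-style update, not MyCaffe's solver code; the `eps` value and the function name `adamw_step` are assumptions, while the learning rate and the two momentum terms are the ones given above.

```python
import math


def adamw_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.0):
    """One AdamW update for a scalar weight (t is the 1-based step count).

    beta1 and beta2 correspond to momentum and momentum2; eps is an assumed
    stabilizer. With weight_decay == 0.0 this is exactly the Adam update.
    """
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    # Decoupled decay: applied to the weight directly, not added to the gradient.
    w = w - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * w)
    return w, m, v


# Toy problem: minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 4):
    grad = 2.0 * (w - 3.0)
    w, m, v = adamw_step(w, grad, m, v, t)
print(w)
```

Because the update is normalized by the second-moment estimate, the early steps each move the weight by roughly the learning rate, regardless of the gradient's magnitude.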

We trained on an NVIDIA RTX A6000 with a batch size of 64, historical steps = 90 and future steps = 30. With these settings, the model uses a little over 4 GB of the RTX A6000's GPU memory.
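The settings above (90 historical steps, 30 future steps, batch size 64) imply that each training sample is a 120-step slice of a series. The sketch below shows one plausible way to cut such slices and group them into batches; the stride of 1 and the helper names `make_windows` and `make_batches` are assumptions, and the DataTemporal layer may sample its windows differently.

```python
def make_windows(series, num_hist=90, num_future=30):
    """Split a series into (history, future) pairs using a stride of 1."""
    total = num_hist + num_future
    windows = []
    for start in range(len(series) - total + 1):
        history = series[start:start + num_hist]
        future = series[start + num_hist:start + total]
        windows.append((history, future))
    return windows


def make_batches(windows, batch_size=64):
    """Group windows into batches; the last batch may be smaller."""
    return [windows[i:i + batch_size] for i in range(0, len(windows), batch_size)]


# Synthetic series of 300 daily values, used only to show the slicing.
series = list(range(300))
windows = make_windows(series)
batches = make_batches(windows)
print(len(windows), "windows in", len(batches), "batches")
```

Each history slice ends exactly where its future slice begins, so the model never sees target values from the period it is asked to predict.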

To start training, first open the project by right clicking on the TFT_favorita project and selecting the ‘Open’ menu option. Next, double click on the TFT_favorita project name to open the project window and select the Run Training button.

We trained the model for around 20,000 iterations.

Step 5 – Analyze the Results

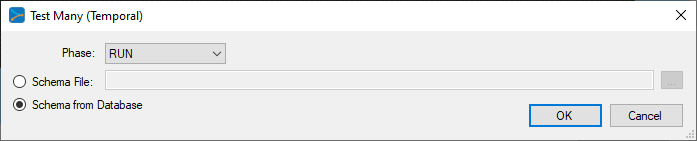

After training for around 20,000 iterations, stop the training and check the ‘Test Many’ radio button at the bottom middle of the Project window. Next, select the Run Testing button, which will then display the Test Many dialog.

Select the Schema from Database radio button to load the data layout used to determine which variables are associated with the weights within the VSN layers.

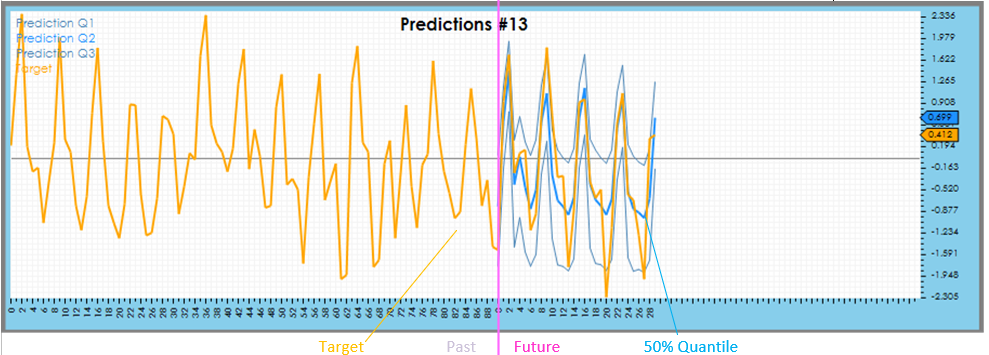

After pressing OK, the analytics are displayed showing several predictions and how they match up with the target data.

Predicted quantiles are shown in blue for the future portion only, whereas the target data is shown in orange.

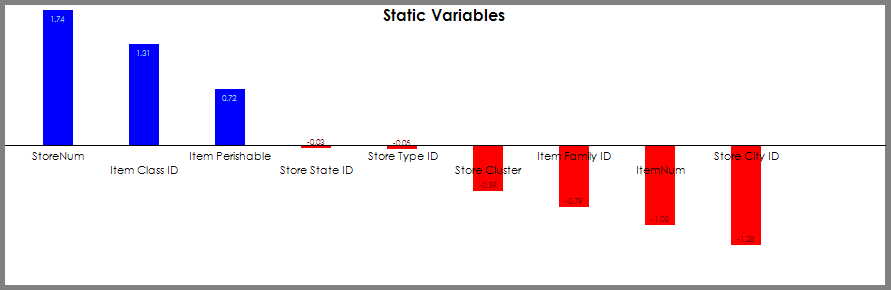

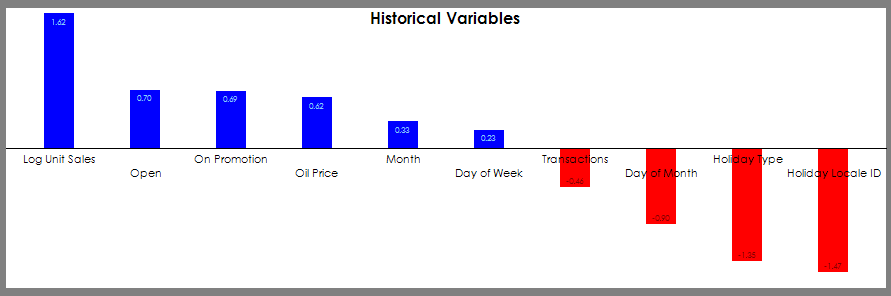

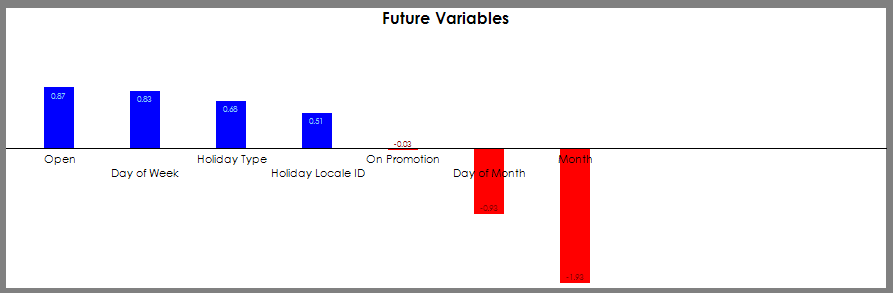
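The TFT paper [1] trains its quantile outputs with the quantile (pinball) loss, which penalizes under- and over-prediction asymmetrically. The sketch below is a minimal pure-Python version; the quantile levels 0.1, 0.5 and 0.9 and all sample values are illustrative assumptions, not the settings or outputs of the model in this tutorial.

```python
def quantile_loss(target, prediction, q):
    """Pinball loss for a single prediction at quantile level q (0 < q < 1)."""
    err = target - prediction
    return max(q * err, (q - 1.0) * err)


def mean_quantile_loss(targets, predictions_by_q):
    """Average the pinball loss over all quantile levels and time steps."""
    total, count = 0.0, 0
    for q, predictions in predictions_by_q.items():
        for y, p in zip(targets, predictions):
            total += quantile_loss(y, p, q)
            count += 1
    return total / count


# Made-up targets and per-quantile predictions for three future steps.
targets = [10.0, 12.0, 9.0]
predictions_by_q = {
    0.1: [8.0, 10.0, 7.0],
    0.5: [10.0, 12.0, 9.0],
    0.9: [12.0, 14.0, 11.0],
}
loss = mean_quantile_loss(targets, predictions_by_q)
print(loss)
```

At the 0.9 level, predicting 2 units too low costs 1.8 while predicting 2 units too high costs only 0.2, which is what pushes the upper quantile above most of the target values.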

After the prediction data you will find the variable analysis from each of the three Variable Selection Networks.

Analyzing the impact of the static variables shows that the Store Number and Item Class have the highest impact on the predictions.

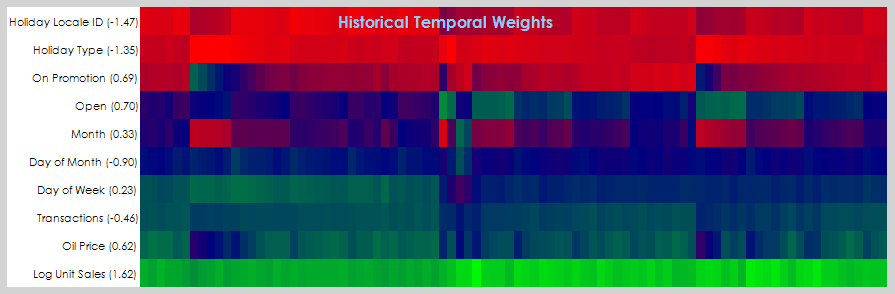

Next, looking at the historical variables, we see that Log Unit Sales, Store Open, On Promotion and Oil Price had the highest impact on the predictions.

The future variables show that both the day of the week and holidays play a strong role in the predictions made.
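In the TFT paper [1], each Variable Selection Network produces softmax weights over its input variables, and rankings like the ones above come from aggregating those weights over many samples. The following sketch shows that aggregation in its simplest form; the variable names, the logits, and the helper `rank_variables` are invented for illustration and are not results from this model or part of the Test Many analytics.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rank_variables(names, logits_per_sample):
    """Average the per-sample selection weights and sort from high to low."""
    sums = [0.0] * len(names)
    for logits in logits_per_sample:
        for i, weight in enumerate(softmax(logits)):
            sums[i] += weight
    n = len(logits_per_sample)
    averaged = [(name, s / n) for name, s in zip(names, sums)]
    return sorted(averaged, key=lambda pair: pair[1], reverse=True)


# Hypothetical variables and made-up selection logits for three samples.
names = ["var_a", "var_b", "var_c"]
logits_per_sample = [
    [2.0, 0.5, 0.1],
    [1.5, 0.7, 0.2],
    [1.8, 0.4, 0.3],
]
ranking = rank_variables(names, logits_per_sample)
for name, weight in ranking:
    print(name, round(weight, 3))
```

Because each sample's weights sum to one, the averaged weights also sum to one and can be read as each variable's share of the selection.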

The temporal variable analysis looks at the temporal weightings for each variable observed on a randomly selected input. This analysis can show a periodicity in the data and also give indications on when a given variable contributed to the predictions over time.

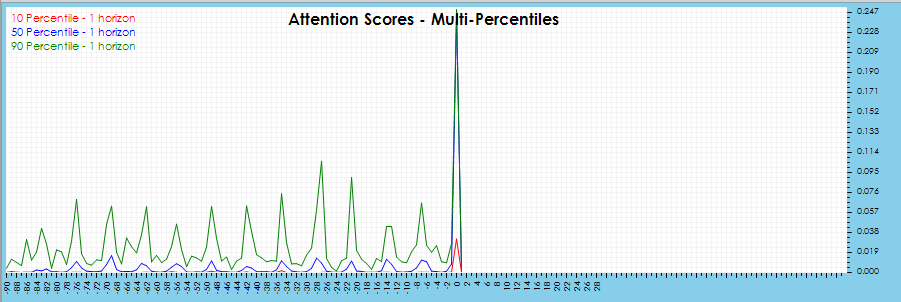

Finally, analyzing the attention scores indicates a fairly strong 7-day cycle, which is most likely attributable to weekends.
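One simple way to check for such a cycle numerically is to look at the autocorrelation of the attention scores across the historical steps and see which lag stands out. The sketch below does this on a synthetic score sequence with a peak every 7 steps; the data and the helper names are assumptions, and this check is not part of the SignalPop AI Designer analytics.

```python
def autocorrelation(values, lag):
    """Autocorrelation of a sequence at the given lag."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((x - mean) ** 2 for x in values)
    covariance = sum(
        (values[i] - mean) * (values[i + lag] - mean) for i in range(n - lag)
    )
    return covariance / variance


def dominant_lag(values, max_lag):
    """Return the lag in 1..max_lag with the highest autocorrelation."""
    return max(range(1, max_lag + 1), key=lambda lag: autocorrelation(values, lag))


# Synthetic attention scores over 90 historical steps, peaking every 7 steps.
scores = [1.0 if step % 7 == 0 else 0.2 for step in range(90)]
best = dominant_lag(scores, 14)
print("dominant lag:", best)
```

On real attention scores the peak is rarely this clean, so it helps to compare the autocorrelation at lag 7 with its neighbors rather than rely on the maximum alone.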

Congratulations! You have now created and trained your first TFT model to predict retail demand flows using MyCaffe!

[1] Bryan Lim, Sercan O. Arik, Nicolas Loeff, and Tomas Pfister, Temporal Fusion Transformers for Interpretable Multi-horizon Time Series Forecasting, 2019, arXiv:1912.09363.

[2] Playtika Research, tft-torch, 2021, GitHub: PlaytikaOSS/tft-torch.