In our latest release of the MyCaffe AI Platform, version 1.12.2.41, we now support Liquid Neural Networks as described in [1], [2], [3] and [4].

Liquid neural networks, first introduced by [1], are dynamic networks constructed “of linear first-order dynamical systems modulated via nonlinear interlinked gates,” resulting in models that “represent dynamical systems with varying (i.e., liquid) time-constants coupled to their hidden state, with outputs being computed by numerical differential equation solvers.”
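To make the idea of a "liquid" time-constant more concrete, here is a minimal sketch of a single LTC-style neuron, assuming the dynamics from [1], dx/dt = -(1/tau + f) * x + f * A, advanced with the fused (semi-implicit Euler) step described there. This is plain Python for illustration, not the MyCaffe implementation; the function name `ltc_step`, the sigmoid gate, and all parameter values are assumptions chosen for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def ltc_step(x, u, w_rec, w_in, bias, tau, A, dt):
    """One fused solver step for dx/dt = -(1/tau + f) * x + f * A (hypothetical helper)."""
    # Nonlinear gate that depends on both the hidden state x and the input u.
    f = sigmoid(w_rec * x + w_in * u + bias)
    # Fused step: the decay term is treated implicitly, which keeps the update stable.
    x_next = (x + dt * f * A) / (1.0 + dt * (1.0 / tau + f))
    # The effective time constant shrinks as the gate opens; this is the "liquid" part.
    tau_eff = tau / (1.0 + tau * f)
    return x_next, tau_eff

x = 0.0
trace = []
for t in range(200):
    u = math.sin(0.1 * t)  # a sine input, similar in spirit to a curve signal
    x, tau_eff = ltc_step(x, u, w_rec=0.5, w_in=2.0, bias=0.0, tau=1.0, A=1.0, dt=0.1)
    trace.append((x, tau_eff))

print(min(te for _, te in trace), max(te for _, te in trace))
```

With these illustrative values the hidden state stays bounded between 0 and A, while the effective time constant moves up and down with the input instead of staying fixed at tau.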

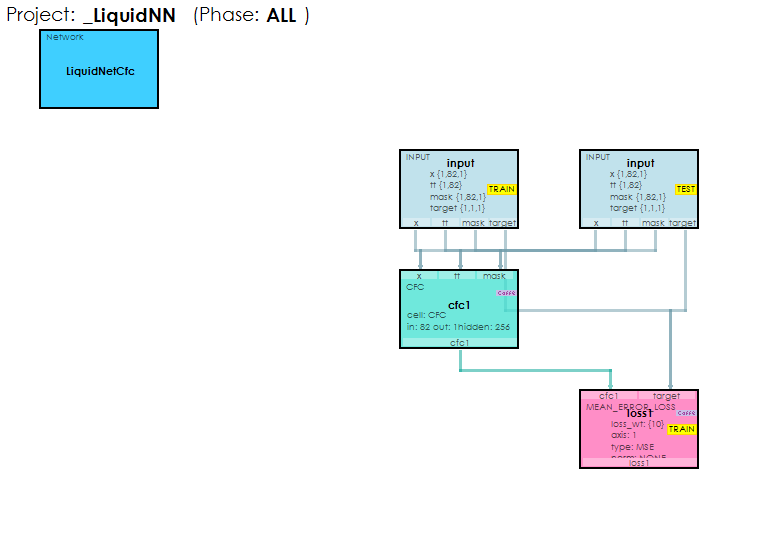

The Liquid Neural Network is implemented in the new CFC Layer (CfcLayer), which supports both the CFC Cell and the LTC Cell as internal layers.

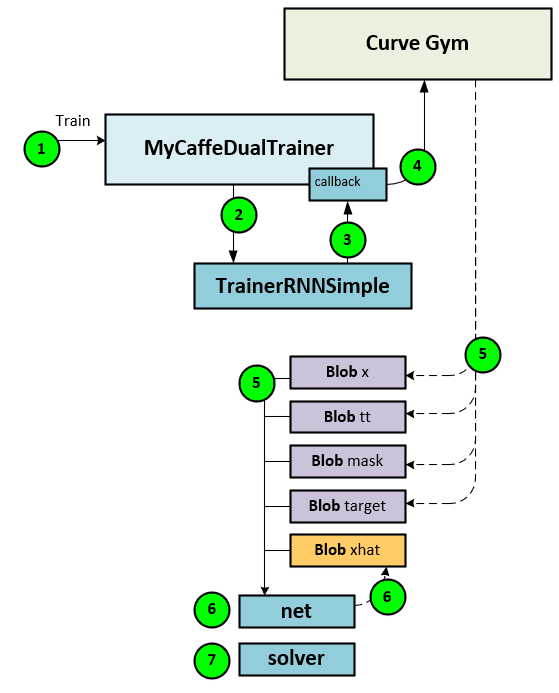

The new Curve Gym sends data from a dynamically generated sine curve to the MyCaffe Liquid Neural Network. Training runs iteratively as the curve progresses, allowing the model to learn the sine curve over time.
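The iterative, learn-as-the-curve-progresses pattern can be sketched in a few lines. The example below is only an illustration under stated assumptions: it swaps the Liquid Neural Network for a simple linear next-value predictor trained with a normalized LMS update, and it does not use the Curve Gym or any MyCaffe API. The constants `WINDOW`, `STEP`, and `STEPS` are hypothetical choices.

```python
import math

WINDOW = 8    # number of past curve points fed to the predictor (hypothetical)
STEP = 0.3    # radians the sine curve advances per time step (hypothetical)
STEPS = 600   # total number of points generated

weights = [0.0] * WINDOW   # linear predictor standing in for the real network
history = [0.0] * WINDOW   # sliding window of the most recent curve values
errors = []

for t in range(STEPS):
    target = math.sin(STEP * t)  # newest point from the generated curve
    # Predict the new point from the window before the target is revealed.
    pred = sum(w * h for w, h in zip(weights, history))
    err = target - pred
    errors.append(abs(err))
    # One normalized LMS training step per new point.
    norm = sum(h * h for h in history) + 1e-8
    weights = [w + err * h / norm for w, h in zip(weights, history)]
    # Slide the window forward to include the new point.
    history = history[1:] + [target]

early = sum(errors[:50]) / 50
late = sum(errors[-50:]) / 50
print(f"mean abs error, first 50 steps: {early:.4f}, last 50 steps: {late:.2e}")
```

Because each point is used for one prediction and one update as it arrives, the prediction error falls as more of the curve is seen, which is the same overall training pattern described above.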

To try out a Liquid Neural Network in MyCaffe for yourself, check out the new Liquid Neural Network tutorial.

Temporal Fusion Transformer Models

In addition, this release includes improvements and bug fixes to the MyCaffe Temporal Fusion Transformer models based on [5] and [6]. MyCaffe now supports a new in-memory temporal database, MyCaffeTemporalDatabase, that stores all temporal data in memory for faster training. The new Favorita sample uses the in-memory temporal database during training to learn retail demand flows across a number of retail stores.

The Favorita dataset is a complex dataset comprising nearly 20 data streams that are all ‘fused’ together by the Temporal Fusion Transformer model to predict the demand flows across a large number of retail products. The dataset contains the following data streams.

Static Numeric – none

Static Categorical – 9, shape = { B, 1 }

- ItemNum (STATIC, CATEGORICAL)

- StoreNum (STATIC, CATEGORICAL)

- Store City ID (STATIC, CATEGORICAL)

- Store State ID (STATIC, CATEGORICAL)

- Store Type ID (STATIC, CATEGORICAL)

- Store Cluster (STATIC, CATEGORICAL)

- Item Family ID (STATIC, CATEGORICAL)

- Item Class ID (STATIC, CATEGORICAL)

- Item Perishable (STATIC, CATEGORICAL)

Historical Numeric – 6, shape = { B, 90, 1 }

- Log Unit Sales (OBSERVED, NUMERIC)

- Oil Price (OBSERVED, NUMERIC)

- Transactions (OBSERVED, NUMERIC)

- Day of Week (KNOWN, NUMERIC)

- Day of Month (KNOWN, NUMERIC)

- Month (KNOWN, NUMERIC)

Historical Categorical – 4, shape = { B, 90, 1 }

- Open (KNOWN, CATEGORICAL)

- On Promotion (KNOWN, CATEGORICAL)

- Holiday Type (KNOWN, CATEGORICAL)

- Holiday Locale ID (KNOWN, CATEGORICAL)

Future Numeric – 3, shape = { B, 30, 1 }

- Day of Week (KNOWN, NUMERIC)

- Day of Month (KNOWN, NUMERIC)

- Month (KNOWN, NUMERIC)

Future Categorical – 4, shape = { B, 30, 1 }

- Open (KNOWN, CATEGORICAL)

- On Promotion (KNOWN, CATEGORICAL)

- Holiday Type (KNOWN, CATEGORICAL)

- Holiday Locale ID (KNOWN, CATEGORICAL)
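The stream groups listed above can be written down as a small layout table, which is a handy way to sanity-check shapes before training. The sketch below is illustrative only: it assumes each stream is held as its own tensor with the per-stream shape given above (B is the batch size), and the names `STREAM_GROUPS`, `stream_shape`, and `describe` are hypothetical, not part of MyCaffe.

```python
HIST_STEPS = 90   # historical window length, taken from the shapes above
FUT_STEPS = 30    # future window length, taken from the shapes above

CALENDAR = ["Day of Week", "Day of Month", "Month"]
EVENTS = ["Open", "On Promotion", "Holiday Type", "Holiday Locale ID"]

# group name -> (time steps, or None for static streams; stream names)
STREAM_GROUPS = {
    "static_numeric": (None, []),
    "static_categorical": (None, [
        "ItemNum", "StoreNum", "Store City ID", "Store State ID", "Store Type ID",
        "Store Cluster", "Item Family ID", "Item Class ID", "Item Perishable"]),
    "historical_numeric": (HIST_STEPS, ["Log Unit Sales", "Oil Price", "Transactions"] + CALENDAR),
    "historical_categorical": (HIST_STEPS, EVENTS),
    "future_numeric": (FUT_STEPS, CALENDAR),
    "future_categorical": (FUT_STEPS, EVENTS),
}

def stream_shape(batch_size, steps):
    """Assumed per-stream shape: {B, 1} for static, {B, steps, 1} for temporal streams."""
    return (batch_size, 1) if steps is None else (batch_size, steps, 1)

def describe(batch_size):
    """Map every group to a dict of stream name -> shape for the given batch size."""
    return {group: {name: stream_shape(batch_size, steps) for name in names}
            for group, (steps, names) in STREAM_GROUPS.items()}

layout = describe(batch_size=32)
total = sum(len(streams) for streams in layout.values())
unique = len({name for streams in layout.values() for name in streams})
print(total, unique)  # prints "26 19"
```

The known calendar and event streams appear in both the historical and future groups, which is why the 26 listed entries reduce to 19 distinct streams.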

This dataset is large and requires around 70 GB of RAM when fully loaded. However, once it is loaded into memory, training proceeds quickly because there is no lag caused by loading data from disk. To help reduce data loading times, the dataset may be loaded into the MyCaffeTemporalDatabase, which holds the data in memory until unloaded.

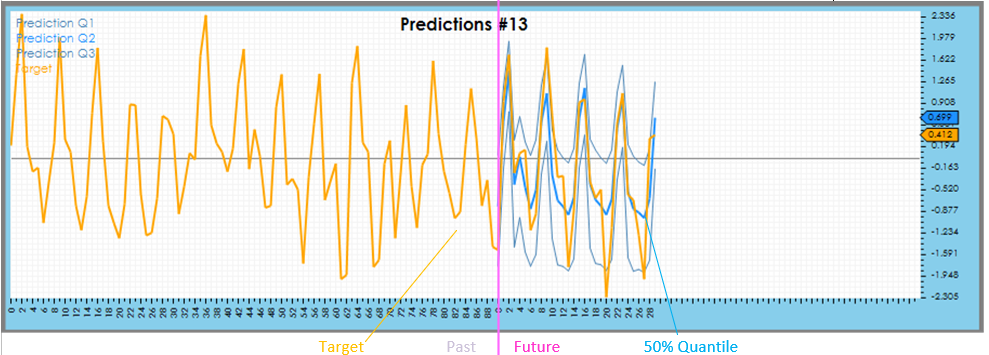

After training, the model predicts the unit sales for each product sold at various locations.

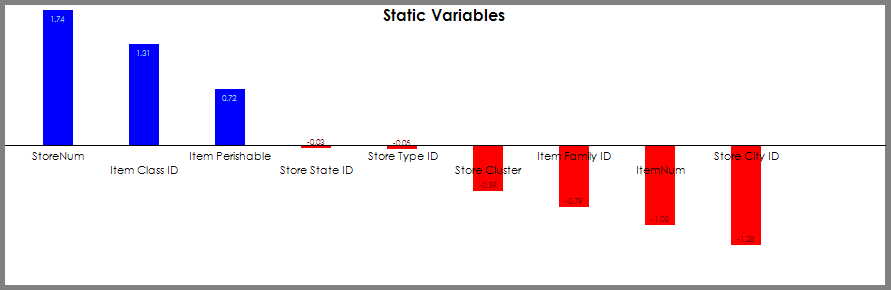

Further analysis shows how static variables impact the predictions.

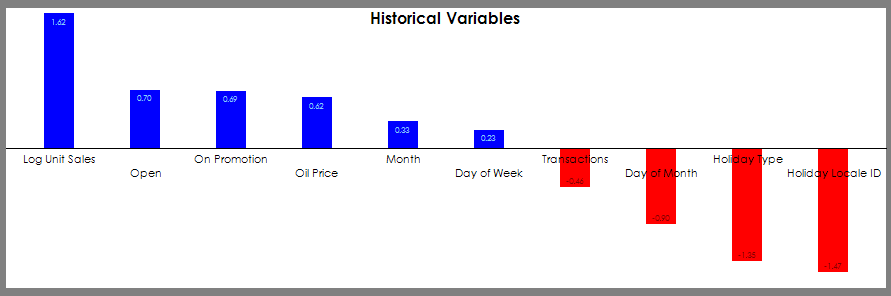

The impact of historical variables on the predictions is shown in the analytics.

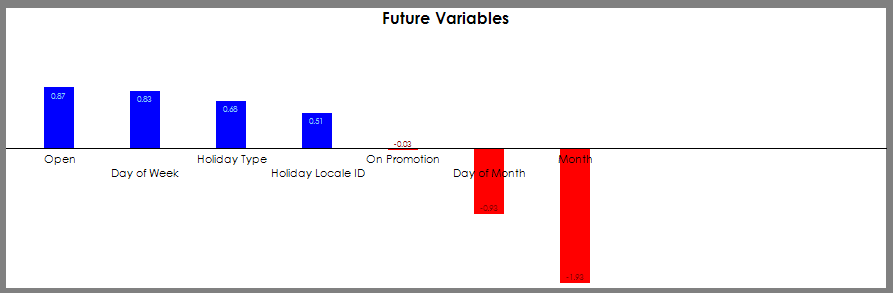

The analytics provided by the model also show the impact of the future variables on the predictions.

As shown above, the store number, previous unit sales, and day of the week all have a high impact on the predictions.

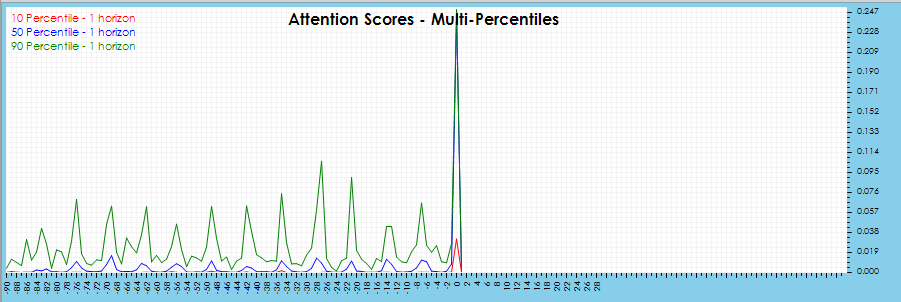

Visualizing the attention analytics reveals a strong 7-day periodic pattern, which further confirms the importance of the day of week, likely due to stronger sales on weekends.
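One simple way to check a score series for this kind of weekly pattern is to look at its autocorrelation. The sketch below is a hedged illustration on synthetic data, not output from the MyCaffe model: it assumes a 90-day series with a made-up weekend bump and searches lags 1 through 14 for the strongest repeat. The helper `autocorrelation` and the series values are hypothetical.

```python
DAYS = 90  # same length as the historical window described earlier

# Synthetic daily scores: a baseline of 1.0 with a bump on two "weekend" days per week.
series = [1.5 if day % 7 in (5, 6) else 1.0 for day in range(DAYS)]

def autocorrelation(values, lag):
    """Sum of products of the mean-centered series with itself shifted by `lag`."""
    mean = sum(values) / len(values)
    centered = [v - mean for v in values]
    return sum(centered[i] * centered[i + lag] for i in range(len(centered) - lag))

scores = {lag: autocorrelation(series, lag) for lag in range(1, 15)}
best_lag = max(scores, key=scores.get)
print(best_lag)  # prints 7
```

On real attention scores the peak is rarely this clean, so it usually helps to inspect the full autocorrelation curve rather than only the single best lag.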

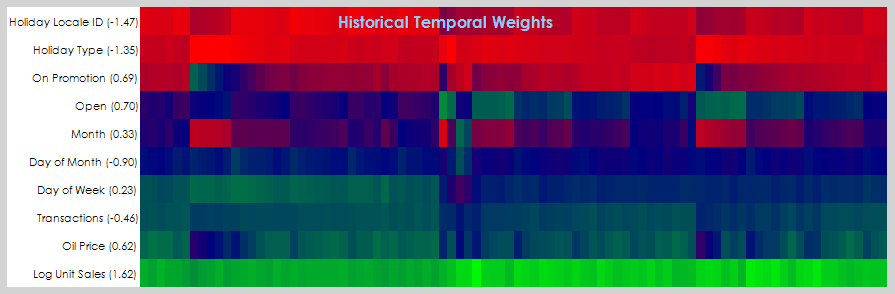

Analyzing each data stream shows how these variables impact the predictions over time.

If you would like to try out these powerful models in MyCaffe, check out the Temporal Fusion Transformer tutorial.

New Features

The following new features have been added to this release.

- CUDA 11.8.0.522/cuDNN 8.8.0.121/nvapi 515/driver 531.14

- Windows 11 22H2

- Windows 10 22H2, OS Build 19045.3448, SDK 10.0.19041.0

- Added SQL support to TFT.electricity Dataset Creator

- Added SQL support to TFT.traffic Dataset Creator

- Added SQL support to TFT.volatility Dataset Creator

- Added Liquid Neural Net support with new CfcLayer, CfcUnitLayer and LtcUnitLayer.

- Added Temporal Database support with the new MyCaffeTemporalDatabase.

- Added weight visualization for RNN type networks.

- Added background loading to MyCaffeTemporalDatabase.

- Added Temporal Database Windows Service support.

Bug Fixes

The following bug fixes have been made in this release.

- Fixed bug in Test Application; the correct image database in use is now reported.

- Fixed bug in CategoricalTransformationLayer regarding resizing.

- Fixed bug when image mean missing in DataLayer.

- Fixed bug in auto test related to logging access.

- Fixed bug in CategoricalTransformationLayer spatial dim.

- Fixed bug in NumericTransformationLayer spatial dim.

- Fixed bugs causing errors in ChannelEmbeddingLayer.

- Fixed bug so that all gateaddnorm parameters are now available.

- Fixed bug in NuGet package; TFT layer modules are now included.

For other great examples, including using Single Shot Multi-Box to detect gas leaks, using Neural Style Transfer to create innovative and unique art, creating Shakespeare sonnets with a CharNet, or beating PONG with Reinforcement Learning, check out the Examples page.

Happy Deep Learning with MyCaffe!

[1] Liquid Time-constant Networks, by Ramin Hasani, Mathias Lechner, Alexander Amini, Daniela Rus, Radu Grosu, 2020, arXiv:2006.04439

[2] GitHub: Closed-form Continuous-time Models, by Ramin Hasani, 2021, GitHub

[3] Closed-form Continuous-time Neural Models, by Ramin Hasani, Mathias Lechner, Alexander Amini, Lucas Liebenwein, Aaron Ray, Max Tschaikowski, Gerald Teschl, Daniela Rus, 2021, arXiv:2106.13898

[4] Closed-form continuous-time neural networks, by Ramin Hasani, Mathias Lechner, Alexander Amini, Lucas Liebenwein, Aaron Ray, Max Tschaikowski, Gerald Teschl, Daniela Rus, 2022, Nature Machine Intelligence

[5] Temporal Fusion Transformers for Interpretable Multi-horizon Time Series Forecasting, by Bryan Lim, Sercan O. Arik, Nicolas Loeff, Tomas Pfister, 2019, arXiv:1912.09363

[6] GitHub: PlaytikaOSS/tft-torch, by Playtika Research, 2021, GitHub